The AI-Becker problem

Who will train the next generation?

To pay individuals like $100 million over a four‑year package, that’s actually pretty cheap compared to the value created for the business. — Benjamin Mann, Anthropic Cofounder

Do we need armies of business analysts creating PowerPoints? No, the technology could do that — Kate Smaje, Head of technology and AI, McKinsey and Co.

When Anthropic's co-founder defends nine-figure pay packages for AI researchers, he inadvertently highlights a paradox. While Meta and Google engage in bidding wars for senior AI talent, with packages reaching $100 million, the same technology is eliminating the entry-level positions that once produced such expertise. To paraphrase what McKinsey's head of technology told Bloomberg in the quotation above: what is the point of junior analysts?

This creates what I’ll call the ‘AI-Becker problem’. Gary Becker showed that companies underinvest in general training because rivals can poach the finished product. In the AI-Becker problem, companies underinvest in general training because junior employees no longer create any value while they learn.

The traditional economics of professional training

Professional services (and other fields) have long operated as knowledge hierarchies. Consider Goldman Sachs in 2019: 38,000 employees arranged in a pyramid with thousands of analysts building Excel models, a smaller cohort of associates refining presentations, and a tiny cadre of managing directors making strategic decisions. Each level learns by doing routine work for the level above.

This pyramid serves a dual economic purpose. First, it leverages senior expertise efficiently: one partner can supervise ten associates, multiplying the partner's impact. Second, it creates natural training grounds where junior work subsidizes its own learning.

This training model solves what Gary Becker identified as the fundamental problem of human capital investment. In Becker's analysis, firms won't pay to train workers in general skills (the kind transferable beyond the firm) because rivals could poach employees once they're trained. Why would Goldman invest millions training analysts if JPMorgan could later hire them away, newly acquired expertise and all?

Employers solve this ‘Becker poaching problem’ by structuring entry-level roles so that junior employees generate revenue as they learn, letting firms recoup training costs before employees can leave with their new skills. (This is what Luis Rayo and I have termed ‘relational knowledge transfers’.)

A law firm can bill a first-year associate at $400-600 per hour for contract review: revenue that not only covers the associate's salary but also makes it worthwhile for the firm to keep them long enough to develop significant expertise. The associate accepts lower wages upfront in return for skill development over time, while the firm captures immediate value from their work. Training becomes a joint product of billable work rather than a pure cost.
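A back-of-the-envelope sketch of this joint product may help. It assumes the $400 hourly rate at the low end of the range above and a hypothetical 2,000 billable hours; the salary and training-cost figures are placeholders, overhead and write-offs are ignored, and the `JuniorYear` class is purely illustrative rather than anyone's actual accounting:

```python
from dataclasses import dataclass


@dataclass
class JuniorYear:
    """One year of a hypothetical first-year associate; all figures are assumptions."""

    billable_hours: float
    hourly_rate: float    # amount billed to the client per hour
    salary: float         # associate's annual pay (assumed)
    training_cost: float  # e.g. value of senior time spent reviewing and teaching (assumed)

    def revenue(self) -> float:
        return self.billable_hours * self.hourly_rate

    def net_contribution(self) -> float:
        """Revenue left after salary and training; ignores overhead and write-offs."""
        return self.revenue() - self.salary - self.training_cost


# Hypothetical scenarios: the same associate before and after AI absorbs most routine hours.
before_ai = JuniorYear(billable_hours=2_000, hourly_rate=400, salary=225_000, training_cost=100_000)
after_ai = JuniorYear(billable_hours=500, hourly_rate=400, salary=225_000, training_cost=100_000)

for label, year in [("before AI", before_ai), ("after AI", after_ai)]:
    print(f"{label}: net contribution {year.net_contribution():,.0f}")
```

With these assumed numbers the junior's billing pays for their own training with room to spare; cut billable hours to a quarter and the same training becomes a net cost to the firm.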

This arrangement works because junior tasks, while routine, still require human judgment and create economic value. Apprenticeships can appear inefficiently long and low-paid precisely because the teacher receives the apprentice's immediate output in exchange for developing their future expertise.¹ The novice's routine work subsidizes their own training while giving the firm an economic justification for investing in their development.

How AI destroys the training model

If AI eliminates the junior work that once subsidized learning, it will destroy this carefully balanced training economy. Advances in generative AI mean that many routine tasks can now be done faster and better with AI, or by machines alone. These are precisely the detail-heavy, repeatable tasks that juniors historically performed to learn their craft.

The legal industry will be one of the first to show this transformation. Law firms can already use OpenAI's o3 to automate contract drafting and due diligence. Allen & Overy’s innovation head has said it will be “a serious competitive disadvantage” for firms not to adopt such AI. These tools review documents in minutes instead of weeks, dramatically compressing junior-level work.

In consulting and finance, Deep Research can handle routine tasks that used to keep teams of junior analysts busy for days. McKinsey has introduced its own AI tool, “Lilli”, which answers half a million queries per month and reportedly saves consultants 30% of their time on research tasks. The tool can also produce the infamous PowerPoint decks that once occupied armies of young minds.

The anecdotal evidence here is strong; this passage from a recent article by New York Times tech columnist Kevin Roose is not unusual:

One tech executive recently told me his company had stopped hiring anything below an L5 software engineer — a midlevel title typically given to programmers with three to seven years of experience — because lower-level tasks could now be done by A.I. coding tools. Another told me that his start-up now employed a single data scientist to do the kinds of tasks that required a team of 75 people at his previous company.

Evidence of the breakdown

The breakdown of traditional training pathways is already visible in hiring data. I am not aware of any careful work attributing it causally to artificial intelligence, however, so what follows is only the weakest type of evidence: time-series correlation.

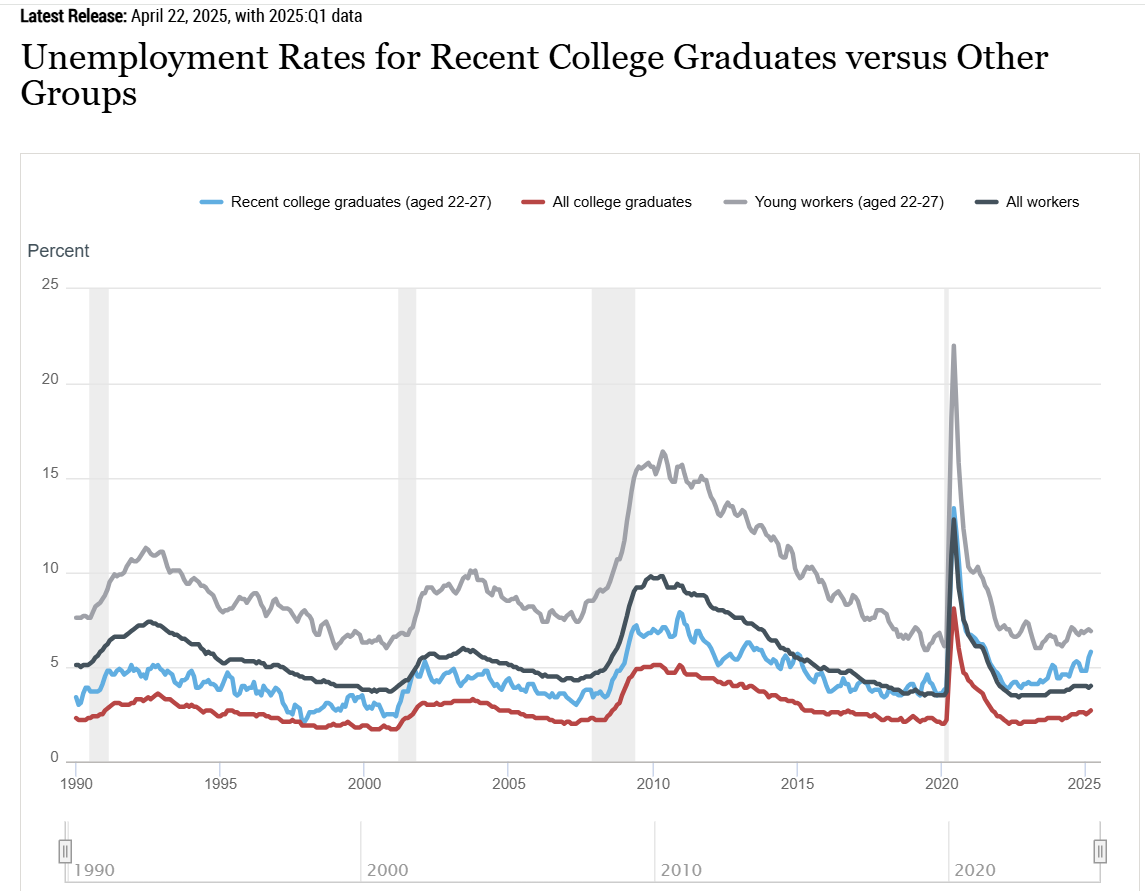

New York Fed data shows unemployment for new college graduates up 30% since the pandemic versus 18% for all workers.

Source: NY Fed

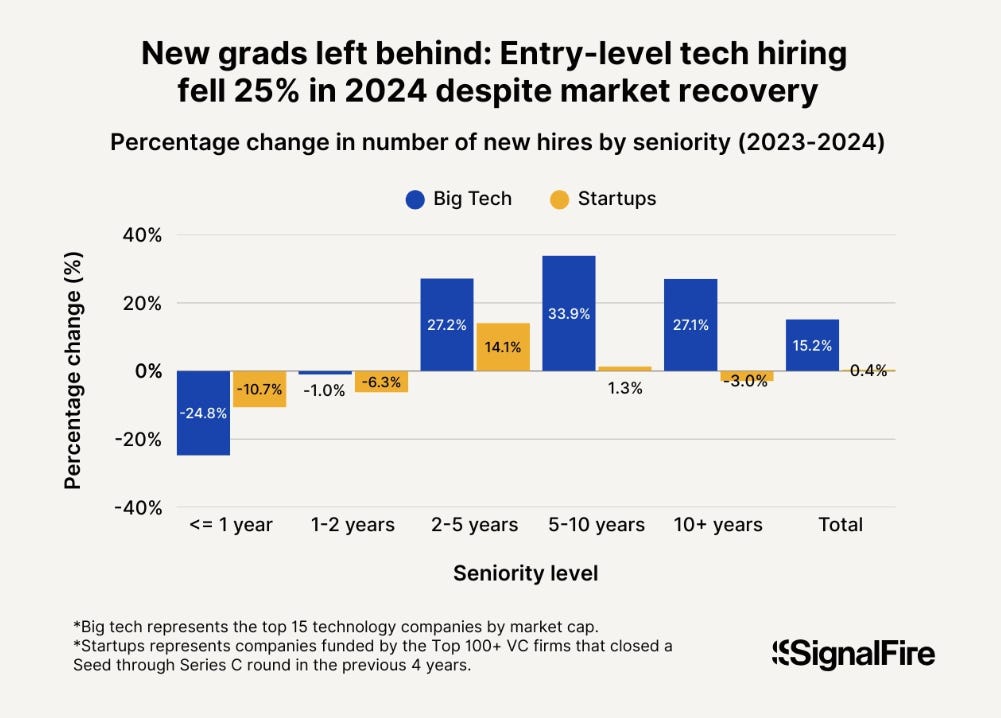

Multiple factors contribute, including economic cycles and corrections to the over-hiring of 2021, but AI-driven efficiency may be one driver. SignalFire's analysis of 650 million LinkedIn profiles found that new-graduate hiring has declined by 25%.

Source: SignalFire

The Supervision Threshold

This transformation creates what Jin Li and Yanhui Wu of HKU and I call, in ongoing work, the “supervision threshold”: a sharp boundary that divides the world of work into two distinct domains. Below the threshold lie increasingly sophisticated but ultimately routine tasks that AI can execute with minimal human oversight, such as drafting standard NDAs and employment contracts, conducting competitor analysis from public data, or writing Python scripts for data cleaning. Workers whose skills fall below the threshold compete with AI but cannot supervise it, and so face commoditization and the risk of redundancy.

Above it sit meta-cognitive tasks requiring judgment: recognizing when a novel business structure needs custom contract language, knowing when AI-generated code contains subtle security vulnerabilities, or realizing when AI “hallucinates” plausible but false information. Those whose skills lie above the supervision threshold can use AI as a tool, wielding much greater leverage to scale their judgment across global markets.

This shift explains the two quotations that open this post. Thanks to AI, a single senior professional can potentially oversee far more output than before. But the traditional “middle rungs” of associates and analysts, who once performed this work while learning, vanish below them.
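One way to see why oversight scales is the span-of-control logic of a knowledge hierarchy from Garicano (2000), listed in the references. Each subordinate works one problem per period, solves a share of them, and escalates the rest; a supervisor with one period of time spends `help_time` on each escalated problem, so she can support `1 / (help_time * (1 - share))` subordinates. The sketch below treats AI as the bottom layer of the hierarchy. That mapping, the function name, and the parameter values are illustrative assumptions, not results from the ongoing work described above:

```python
def span_of_control(share_solved_below: float, help_time: float) -> float:
    """Subordinates one supervisor can support in a simple knowledge hierarchy.

    Each subordinate (human junior or AI tool) faces one problem per period and
    solves `share_solved_below` of them; the rest are escalated. The supervisor
    has one period of time and spends `help_time` on each escalated problem.
    """
    if not 0.0 <= share_solved_below < 1.0:
        raise ValueError("share_solved_below must be in [0, 1)")
    if help_time <= 0.0:
        raise ValueError("help_time must be positive")
    escalations_per_subordinate = 1.0 - share_solved_below
    return 1.0 / (help_time * escalations_per_subordinate)


# Hypothetical comparison: juniors solve 60% of problems unaided, AI tools solve 80%.
juniors = span_of_control(share_solved_below=0.6, help_time=0.1)
ai_tools = span_of_control(share_solved_below=0.8, help_time=0.1)
print(f"juniors: {juniors:.0f} per supervisor, AI tools: {ai_tools:.0f} per supervisor")
```

Halving the share of escalated problems (from 40% to 20%) doubles the supervisor's span in this toy example. Note that nothing in the formula trains the next supervisor.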

The result is the destruction of the joint-product model that made professional training economically viable. If AI platforms handle the routine work, junior employees can no longer bill 2,000 hours annually to subsidize their own training.

This exacerbates the Becker poaching problem. If Company A invests time and money to turn a raw college graduate into an expert, Company B can hire that person after five years at a higher salary, reaping the benefits of skills that Company A paid to build. In the past, firms tolerated this risk because juniors produced valuable work along the way. Without that value, the economic foundation of apprenticeship collapses entirely.
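The Becker calculus in this paragraph can be written as a simple present-value test. The sketch below assumes the firm pays an up-front training cost, keeps the surplus a junior produces (value of output minus wage) only until a rival poaches them, and discounts at a hypothetical 5% rate. The `training_npv` function and all figures are illustrative, not estimates:

```python
def training_npv(
    annual_surplus: float,
    years_before_poached: int,
    training_cost: float,
    discount_rate: float = 0.05,
) -> float:
    """Present value to the firm of training one junior in general skills.

    `annual_surplus` is the junior's output value minus their wage, received at
    the end of each year until they are poached. After that, a rival pays the
    market wage for the skills, so the training firm captures nothing more.
    """
    pv_surplus = sum(
        annual_surplus / (1 + discount_rate) ** year
        for year in range(1, years_before_poached + 1)
    )
    return pv_surplus - training_cost


# Hypothetical numbers: five years before poaching, 400,000 of senior time spent training.
pre_ai = training_npv(annual_surplus=150_000, years_before_poached=5, training_cost=400_000)
post_ai = training_npv(annual_surplus=20_000, years_before_poached=5, training_cost=400_000)

for label, npv in [("junior work billable", pre_ai), ("junior work done by AI", post_ai)]:
    decision = "train" if npv >= 0 else "do not train"
    print(f"{label}: NPV {npv:,.0f} -> {decision}")
```

Lengthening `years_before_poached` helps, but once the annual surplus is small no tenure rescues the investment: with these numbers, even a junior who never leaves only brings the present value up toward zero, since the perpetuity value 20,000 / 0.05 exactly equals the 400,000 training cost.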

New Ladders

Any viable solution to the AI-Becker problem must satisfy three economic constraints simultaneously. First, it must compress the time required to reach the supervision threshold, eliminating the years of routine work that AI now performs while ensuring that graduates still acquire the pattern recognition and contextual knowledge that separate competent judgment from expert judgment. Second, it must solve the financing problem: if firms cannot bill junior work to subsidize training, either public policy must create new incentives for private investment in general human capital, or alternative funding mechanisms must emerge. Third, it must be robust to technological change: it cannot assume that AI capabilities will plateau at current levels, but must prepare workers for a world in which the supervision threshold keeps rising.

Governments can shift the incentives. Singapore’s SkillsFuture scheme refunds up to 90 percent of wage cost when firms deliver certified, judgment‑oriented training. Programmes like this tilt the Becker calculus by sharing the cost of training in general skills.

The private sector could develop similar solutions: industry-wide training consortiums in which competitors share the costs and benefits of developing talent, or public-private partnerships that fund compressed apprenticeship programs in exchange for commitments to domestic employment.

Universities must also adapt. Students need to enter firms already able to do more than the work AI replaces. This means that we at educational institutions must develop curricula focused on meta-cognitive skills: understanding AI's capabilities and limitations, recognizing when human judgment is required, and developing the contextual knowledge that separates routine application from strategic thinking. Students need experience working with AI as a tool while learning to make the judgment calls that remain uniquely human. Curricula must centre on model diagnostics, causal inference, version control, and sector‑specific domain knowledge; in short, on the meta‑cognition that lives above the supervision threshold.

The challenge ahead is making sure that today’s entry-level workers actually get the experience needed to become tomorrow’s experts, even as AI takes over their training grounds and firms lose the incentive to provide that experience.

References

Garicano, Luis. “Hierarchies and the Organization of Knowledge in Production.” Journal of Political Economy 108, no. 5 (2000): 874-904.

Garicano, Luis, and Thomas N. Hubbard. “The Returns to Knowledge Hierarchies.” The Journal of Law, Economics, and Organization 32, no. 4 (2016): 653-684.

Garicano, Luis, and Luis Rayo. “Relational Knowledge Transfers.” American Economic Review 107, no. 9 (2017): 2695-2730.

Garicano, Luis, and Esteban Rossi-Hansberg. “Organization and Inequality in a Knowledge Economy.” The Quarterly Journal of Economics 121, no. 4 (2006): 1383-1435.

Garicano, Luis, and Esteban Rossi-Hansberg. “Knowledge-Based Hierarchies: Using Organizations to Understand the Economy.” Annual Review of Economics 7, no. 1 (2015): 1-30.

¹ See our analysis of why in the 2017 American Economic Review paper with Luis Rayo, cited above.